In the winter of 1958, Frank Rosenblatt, a 30-year-old psychologist, was traveling from Cornell University to the Office of Naval Research in Washington DC when he stopped for coffee with a journalist.

Rosenblatt had unveiled a remarkable invention that, in the early days of computing, caused quite a stir. He proclaimed it to be the “first machine capable of generating original ideas.”

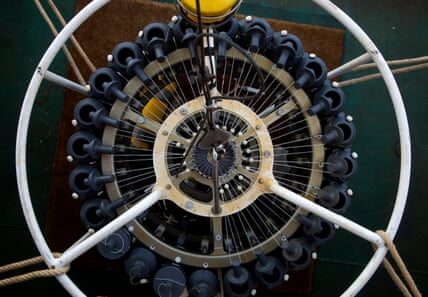

The Perceptron, created by Rosenblatt, was a computer program modeled on human neurons. It ran on a state-of-the-art IBM mainframe that weighed five tonnes and was the size of a wall. Fed a stack of punch cards, the Perceptron learned to distinguish cards marked on the left from those marked on the right. The task was mundane, but the machine was capable of learning.
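The card-sorting task can be sketched in a few lines of Python. This is only an illustration of a perceptron-style learning rule, not Rosenblatt's original program: the four-cell card encoding, the function names, and the training settings are all assumptions made for the example.

```python
def train_perceptron(cards, labels, epochs=20, lr=1.0):
    """Perceptron-style rule: weights change only when a card is misclassified."""
    weights = [0.0] * len(cards[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(cards, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else 0
            error = y - prediction  # 0 when correct, +1 or -1 when wrong
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

def predict(weights, bias, x):
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

# Hypothetical encoding: each card has four cells, left to right; 1 means marked.
cards = [[1, 0, 0, 0], [0, 1, 0, 0], [1, 1, 0, 0],
         [0, 0, 1, 0], [0, 0, 0, 1], [0, 0, 1, 1]]
labels = [0, 0, 0, 1, 1, 1]  # 0 = marked on the left, 1 = marked on the right

weights, bias = train_perceptron(cards, labels)
predictions = [predict(weights, bias, card) for card in cards]
```

After a few passes over the cards, the learned weights are negative for the left-hand cells and positive for the right-hand cells, which is all this toy machine needs to separate the two piles.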

Rosenblatt was optimistic about the future, and the journalist, writing for the New Yorker, shared his enthusiasm, describing the Perceptron as a serious rival to the human brain. Asked what it could not do, Rosenblatt named love, hope, and despair: human nature, in short. If people do not understand the human sex drive, he reasoned, a machine can hardly be expected to.

The Perceptron was the first neural network, a rudimentary version of the far more intricate “deep” neural networks that power much of contemporary artificial intelligence (AI).

However, almost seven decades later, the human brain still has no serious rival. According to Professor Mark Girolami, chief scientist at the Alan Turing Institute in London, today’s AI systems are comparable to “parrots”: they can mimic human speech but do not match human intelligence. These developments are valuable for society, he says, but their capabilities should not be exaggerated.

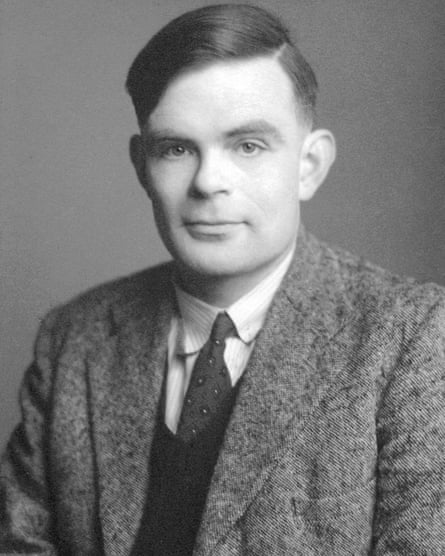

The history of AI, at least as it is written today, credits many pioneers. Rosenblatt is sometimes described as a creator of deep learning, a title he shares with three others. Alan Turing, the wartime codebreaker known for his contributions to computer science, is also regarded as a key figure in the development of AI. He was among the first to take seriously the idea that computers could possess intelligence.

In his 1948 paper “Intelligent Machinery,” Turing examined how machines might imitate intelligent behavior. One way to build a “thinking machine,” he suggested, would be to replace a person’s body parts with machinery: cameras for eyes, microphones for ears, and an electronic brain. For the machine to learn on its own, Turing joked, it would need to be free to explore its surroundings. He dismissed the idea as too slow and impractical, and noted that it would pose a danger to ordinary citizens.

However, many of Turing’s ideas have endured. He suggested that machines could learn much as children do, guided by rewards and punishments, and that some machines could adapt by rewriting their own programs. Today, machine learning, rewards, and self-modification are fundamental concepts in AI.

Turing proposed the Imitation Game, now known as the Turing test, as a way to measure progress in artificial intelligence. The test asks whether a person can tell if written responses come from a human or a machine.

The test is clever, but attempts to pass it have caused a lot of confusion. In one recent case, researchers raised eyebrows by claiming to have passed the test with a chatbot that posed as a 13-year-old from Ukraine with a guinea pig that could play Beethoven’s Ode to Joy.

Girolami notes that Turing made another significant contribution to AI that is often overlooked. A declassified document from his time at Bletchley Park reveals that he used Bayesian statistics to decipher coded messages. With this approach, Turing and his colleagues could work out the probability that a given German word had produced a particular set of encrypted letters.

A similar Bayesian approach now underpins generative AI programs that produce essays, artwork, and images of people who do not exist. According to Girolami, a great deal of work on Bayesian statistics over the past 70 years has paved the way for today’s generative AI, and it can be traced back to Turing’s work on encryption.
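The Bayesian reasoning described above can be illustrated with a toy calculation. The sketch below is not a reconstruction of the Bletchley Park method: the two candidate words, their prior probabilities, and the likelihood values are invented purely to show how Bayes' rule turns "how likely is this ciphertext given the word" into "how likely is this word given the ciphertext".

```python
def posterior(priors, likelihoods):
    """Bayes' rule: P(word | ciphertext) is proportional to
    P(ciphertext | word) * P(word), normalised over all candidates."""
    unnormalised = {word: priors[word] * likelihoods[word] for word in priors}
    total = sum(unnormalised.values())
    return {word: value / total for word, value in unnormalised.items()}

# Invented numbers for illustration only.
priors = {"WETTER": 0.6, "WASSER": 0.4}         # belief before seeing the ciphertext
likelihoods = {"WETTER": 0.02, "WASSER": 0.09}  # P(observed letters | word)

beliefs = posterior(priors, likelihoods)
```

In this made-up case the word that started out less likely ends up as the stronger candidate, because the observed letters are much easier to explain under it.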

The term “artificial intelligence” was coined in 1955 by John McCarthy, a computer scientist at Dartmouth College in New Hampshire. He used it in a proposal for a summer school, for which he had high hopes.

He wrote that significant progress could be made if a carefully chosen group of scientists worked on the problem together for a summer.

Dr. Jonnie Penn, an associate teaching professor of AI ethics at the University of Cambridge, points out that this was the postwar era. The US government believed that nuclear weapons had won the war, so the standing of science and technology was at its peak.

In the event, the attendees made little progress. Even so, researchers went on to build increasingly advanced programs and sensors that allowed computers to perceive and react to their surroundings, solve problems, and handle human language.

At the time, computerized robots could read and carry out English instructions on clunky cathode-ray tube monitors, and labs were showcasing robots that trundled around, bumping into desks and filing cabinets. In 1970, Marvin Minsky of the Massachusetts Institute of Technology, a prominent figure in AI, told Life magazine that within three to eight years there would be a machine with the general intelligence of an average human. It would be able to read Shakespeare, service a car, tell jokes, play office politics, and even get into a fight. Within months, by teaching itself, its abilities would be beyond measure.

In the 1970s, the AI bubble burst. Sir James Lighthill, a renowned British mathematician, published a scathing report on the field’s limited progress, and funding was abruptly cut.

A resurgence came when a new generation of scientists identified knowledge as the key to solving AI’s problems.

Their goal was to program human knowledge directly into computers. The most ambitious of many such attempts was Cyc, which sought to capture all the knowledge that a well-educated person draws on in everyday life.

This meant coding all of that knowledge in by hand. But getting specialists to explain how they made their decisions, and then encoding that information in a computer, proved far harder than scientists had anticipated.

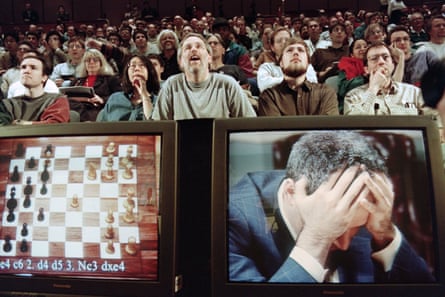

AI did score notable successes in the 20th century. In 1997, IBM’s Deep Blue defeated the chess grandmaster Garry Kasparov. The match attracted worldwide attention, with Newsweek billing it as “The Brain’s Last Stand”.

During a game, Deep Blue analyzed 200 million positions per second and looked up to 80 moves ahead. Kasparov remarked that the machine played exceptionally well.

According to Matthew Jones, a history professor at Princeton University and co-author of the 2023 book How Data Happened, Deep Blue’s victory was in some sense the last gasp of a more traditional approach to AI.

Real-world problems are messier: the rules may be unclear and information may be missing. Unlike a human, a chess-playing AI cannot plan your day, clean the house, or drive a car. According to Prof Eleni Vasilaki of the University of Sheffield, chess may not be the most suitable benchmark for artificial intelligence.

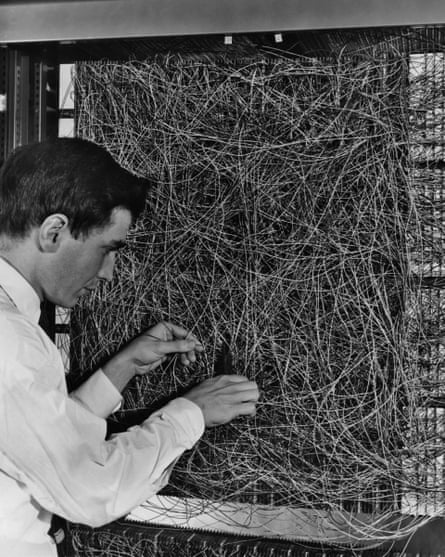

Since Deep Blue, the most notable advances in AI have come from a different approach, one that traces back to Rosenblatt and his card-sorting Perceptron. Single-layer neural networks based on the Perceptron had inherent limitations and were not very practical. Scientists knew that multi-layered networks would be far more capable, but they lacked both the computing power and an understanding of how to train them.

The breakthrough came in 1986, when researchers including Geoffrey Hinton, then at Carnegie Mellon University, developed a method called “backpropagation” for training the networks. Instead of individual “neurons” communicating only with their neighbors, entire layers could now communicate with each other.

Imagine you build a neural network to sort pictures into kittens and puppies. An image is fed in and processed through the network’s layers. Each layer examines different characteristics, such as edges, outlines, fur, and faces, and passes its results to the next layer. The final layer computes the probability that the image shows a cat or a dog. If the network gets it wrong, for example by deciding that Rover would wear a bell, you can calculate the size of the error and work backwards through the network, adjusting the weights of the neurons (essentially the strength of their connections) to reduce the error. Repeating this process many times is how the network learns.
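For readers who want to see the mechanics, here is a minimal sketch of that loop in plain Python. It is a toy, not how production networks are built: instead of real images it uses two made-up numeric features per animal, the network has just one small hidden layer, and every name and number in it is an assumption chosen for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    """Pass the input through one hidden layer, then a single output neuron."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output

def train_step(x, target, w_hidden, w_out, lr=0.5):
    """One backpropagation update; returns the squared error before the update."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output neuron.
    delta_out = (output - target) * output * (1.0 - output)
    # Work backwards: share the error among the hidden neurons via their weights.
    delta_hidden = [delta_out * w_out[j] * h * (1.0 - h) for j, h in enumerate(hidden)]
    # Adjust every connection a little in the direction that reduces the error.
    for j, h in enumerate(hidden):
        w_out[j] -= lr * delta_out * h
        for i, xi in enumerate(x):
            w_hidden[j][i] -= lr * delta_hidden[j] * xi
    return 0.5 * (output - target) ** 2

# Made-up features per image: [ear pointiness, snout length, constant bias input].
images = [[0.9, 0.2, 1.0], [0.8, 0.3, 1.0], [0.3, 0.9, 1.0], [0.2, 0.8, 1.0]]
is_dog = [0.0, 0.0, 1.0, 1.0]

random.seed(0)
w_hidden = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(2)]
w_out = [random.uniform(-1, 1) for _ in range(2)]

epoch_errors = []
for _ in range(2000):
    epoch_errors.append(
        sum(train_step(x, t, w_hidden, w_out) for x, t in zip(images, is_dog))
    )
```

The list `epoch_errors` records the total error on each pass over the data; comparing its first and last entries shows the error shrinking as the weights are repeatedly adjusted.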

The discovery brought neural networks back into the spotlight, but researchers were once again held back by limited computing power and data. That changed in the 2000s with more powerful processors, in particular the graphics processing units developed for video gaming, and with the vast amount of data (words, images, and audio) available on the internet. Another major breakthrough came in 2012, when scientists demonstrated the power of “deep” neural networks with many layers. Hinton and colleagues introduced AlexNet, an eight-layer network with roughly 650,000 neurons, which trounced its competitors in the ImageNet challenge, an international competition that tests how well AI can recognize images from a database of millions.

According to Professor Mirella Lapata, a specialist in natural language processing at the University of Edinburgh, AlexNet was a pivotal moment because it showed that scale matters. Previously, people believed that putting human knowledge into a computer would enable it to perform tasks. The thinking has since shifted, with computation and scale now seen as the crucial factors.

A rapid succession of triumphs followed AlexNet. Google’s DeepMind, founded in 2010 with the goal of solving intelligence, presented an algorithm that learned to excel at classic Atari games from scratch. Through trial and error, it discovered a winning strategy for Breakout: tunnel through one side of the wall and send the ball into the empty space behind it. Another DeepMind algorithm, AlphaGo, defeated the champion Lee Sedol at the Chinese board game Go. The company has also released AlphaFold, which learned how the shapes of proteins relate to their chemical composition and predicted 3D structures for over 200 million proteins, almost every protein known to science. These structures are now revolutionizing medical science.

The deep learning revolution generated plenty of attention-grabbing headlines, but they pale beside the impact of generative AI. These powerful tools, exemplified by OpenAI’s ChatGPT, released in 2022, can produce a wide variety of content, including essays, poems, job application letters, artworks, movies, and classical music.

At the core of generative AI is the transformer, developed by Google researchers originally to improve translation. The title of the 2017 paper that introduced it, “Attention Is All You Need,” nods to a popular Beatles song. Even its creators may not have anticipated how significant it would become.

Llion Jones, a co-author of the paper who also came up with its title, has since left Google to start a new company, Sakana AI. Speaking from his office in Tokyo, where he was running a new transformer experiment, he looked back on the paper’s reception. “We originally intended for our creation to be quite versatile, rather than specifically for translation. But we never anticipated just how widely it would be used and adopted,” he remarks. “Transformers are now utilized in almost everything.”

Before transformers, AI translation models typically learned language by processing the words of a sentence one at a time. This approach is slow and performs poorly on longer sentences: by the time the model reaches the end of a sentence, it may have forgotten the beginning. Transformers address these problems with a technique known as attention, which allows the model to process all the words in a sentence simultaneously and to interpret each word in relation to the others.
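The idea of relating every word to every other word at once can be sketched as follows. This is a stripped-down illustration of scaled dot-product self-attention, under heavy assumptions: real transformers learn separate query, key, and value projections and use many attention heads, whereas here the word vectors themselves play all three roles, and the two-number "embeddings" are invented for the example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors."""
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        # Score this word against every word in the sentence, itself included.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        row = softmax(scores)
        weights.append(row)
        # The new representation is a weighted blend of all the word vectors.
        outputs.append([sum(w * v[d] for w, v in zip(row, vectors))
                        for d in range(dim)])
    return weights, outputs

sentence = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical word vectors

attn_weights, contextual = self_attention(embeddings)
```

Each row of `attn_weights` says how much one word draws on every word in the sentence, and because no row depends on another, all of them can be computed at the same time rather than one word after the next.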

OpenAI’s GPT, which stands for “generative pre-trained transformer”, and similar large language models can churn out lengthy and fluent, if not always wholly reliable, passages of text. Trained on enormous amounts of data, including most of the text on the internet, they learn features of language that eluded previous algorithms.

One of the most notable and exciting features of transformers is their ability to tackle a wide range of tasks. Once they have learned the characteristics of a form of data, such as music, video, images, or speech, they can be prompted to generate more of it. Where earlier approaches needed different neural networks for different types of media, transformers can be adapted to all of them.

According to Michael Wooldridge, a computer science professor at the University of Oxford and author of The Road to Conscious Machines, this marks a significant technological advance. He believes Google did not foresee the paper’s potential and would not have published it had the company realized how far-reaching its impact on artificial intelligence would be.

Wooldridge envisions transformer networks monitoring CCTV to spot crimes in real time. He also predicts a future in which generative AI brings back deceased celebrities such as Elvis and Buddy Holly. Fans of the original Star Trek series could have an unlimited supply of AI-generated episodes, with voices resembling those of William Shatner and Leonard Nimoy. Real and artificial voices would become indistinguishable.

However, the revolution comes at a price. Training models such as ChatGPT consumes a significant amount of computing power and contributes to carbon emissions. According to Penn, generative AI has put us on a damaging course with respect to the environment. Rather than relying on AI constantly, he argues, we should use it where it is genuinely useful and avoid wasting resources where it is not.

Source: theguardian.com